Image credit: ServeTheHome

Overview

Hey everyone, this will be a quick overview of how I use HDDs with multiple cache pools. A few notes before we begin:

- I’m not suggesting you ditch your SSD cache entirely; I haven’t done that on any of my servers. I use both HDD and SSD cache pools.

- SSD endurance is something that should be on everyone’s radar. If you can limit writes to your SSD, it will last longer. Sure, there are high endurance SSDs (and I always recommend them), but they are often more expensive than “normal” SSDs. Also, there’s no point in writing certain files to an SSD cache if the performance will be exactly the same writing to an HDD cache instead.

- Most modern HDDs can read and write sequentially faster than gigabit Ethernet (GbE) can deliver data (GbE tops out at 125 MB/s before overhead), which makes an HDD cache ideal for large files such as media.

- Smaller 10k/15k RPM HDDs in RAID0 are plenty fast for unpack directories. They are cheap and keep that write load off your SSD.

- The less work your SSD cache has to do, the better it will perform for the things that actually need it.

- HDDs are cheaper per TB than SSDs by a huge margin. Hell, the 1TB drives I use in the guide were free with the HP S01 I recently bought.

- This won’t work for every use-case, so adapt it to your personal needs.

- This is a good time to review how cache works on Unraid.

Check out this video: https://www.youtube.com/watch?v=X0hX1cDP_1w

Quiz yourself: do you know what the following mean?

- Cache: Yes

- Cache: No

- Cache: Prefer

- Cache: Only
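
If you want to check your answers, here is a small Python lookup table summarizing the four settings as I understand them from the Unraid share settings. The class and field names (`CacheMode`, `new_writes_go_to`, `mover_direction`) are made up for this illustration and are not part of Unraid; treat it as a cheat sheet, not a spec.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CacheMode:
    """Hypothetical summary of one 'Use cache pool' setting."""
    new_writes_go_to: str           # where new files land first
    mover_direction: Optional[str]  # what the mover does, or None if it does nothing


CACHE_MODES = {
    # New files land on the pool, the mover later pushes them to the array.
    "Yes": CacheMode("cache pool (array if the pool is full)", "cache -> array"),
    # The pool is never used for new files; the mover ignores the share.
    "No": CacheMode("array", None),
    # Files should live on the pool; the mover pulls strays back from the array.
    "Prefer": CacheMode("cache pool (array if the pool is full)", "array -> cache"),
    # Files only ever live on the pool; the mover ignores the share.
    "Only": CacheMode("cache pool", None),
}

for name, mode in CACHE_MODES.items():
    action = mode.mover_direction or "no mover action"
    print(f"Cache: {name:<6} writes to {mode.new_writes_go_to}; mover: {action}")
```

The two settings that matter most for this guide are Yes (ingest on a pool, archive to the array later) and Prefer (keep the share on a pool permanently).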

Hardware recommendations:

Literally any HDD will work for bulk file ingest, even standard consumer ones. I use 1TB WD Blues for Server 1, because that’s what I had handy and that server is SATA only. I don’t store anything important there, period.

Another option is cheap, small, but fast 2.5" SAS 10k/15k RPM drives. These work well as a single drive, or in BTRFS RAID0 (stripe) or RAID1 (mirror), depending on what you want to use them for. Many people have been using them in RAID0 for an unpack directory, so as not to thrash their main SSD.
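
To put some numbers on why any HDD is fine for ingest over gigabit, here is a back-of-the-envelope Python sketch. The 125 MB/s figure is just the GbE line rate (1 Gbit/s divided by 8), so real-world throughput will be a bit lower. The 150 MB/s HDD figure and the 500 MB/s SSD figure are assumptions picked for illustration, not measurements of my drives.

```python
# GbE line rate: 1,000,000,000 bits/s -> bytes -> megabytes (decimal MB).
GBE_LINE_RATE_MB_PER_S = 1_000_000_000 / 8 / 1_000_000  # 125.0

# Assumed sequential speeds, for illustration only.
ASSUMED_HDD_SEQ_MB_PER_S = 150.0
ASSUMED_SSD_SEQ_MB_PER_S = 500.0


def ingest_seconds(size_gb: float, network_mb_per_s: float, disk_mb_per_s: float) -> float:
    """Time to ingest a file when the slower of network and disk sets the pace."""
    bottleneck = min(network_mb_per_s, disk_mb_per_s)
    return size_gb * 1000 / bottleneck


hdd_time = ingest_seconds(50, GBE_LINE_RATE_MB_PER_S, ASSUMED_HDD_SEQ_MB_PER_S)
ssd_time = ingest_seconds(50, GBE_LINE_RATE_MB_PER_S, ASSUMED_SSD_SEQ_MB_PER_S)
print(f"50 GB to HDD cache: {hdd_time:.0f} s, to SSD cache: {ssd_time:.0f} s")
```

With these assumptions both transfers take the same time, because the network is the bottleneck either way. If you are on 10GbE, or you are writing lots of small files, the math changes and the SSD starts to matter.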

Server 1

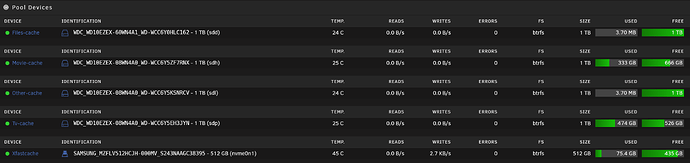

I have a lot of hard drives in this server. I spin down drives when not in use to save power. Honestly, the server isn’t used that much; it’s basically a media archive. Out of 28 HDDs (+2 parity), only about 3-5 are spinning daily. I use multiple HDD cache pools plus the CA Mover Tuning plugin to reduce drive spin-up even further. I keep the HDD cache pools spinning, and there’s one for each of my shares (another reason to not just use a single large share called “media”).

This is how the share is configured, using TV as an example:

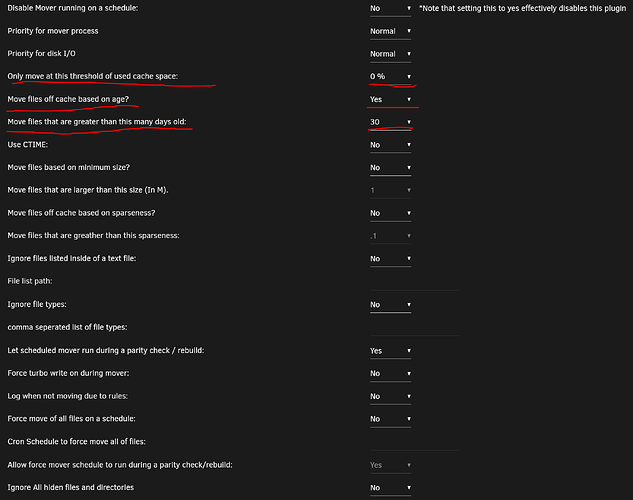

Basically, anything that was ingested on the server within the past 30 days stays on the HDD cache pools. After the file has been there for 30 days, it gets moved to the main array and “archived”. It’s still accessible obviously, but it’s on the main array. Here’s the CA Mover Tuning config that I use:
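
Separately from the plugin settings, the 30-day rule itself is easy to picture in code. The Python sketch below is not how CA Mover Tuning is implemented and it doesn't move anything; it only lists the files on a pool that are older than a cutoff. It assumes file age is judged by modification time, and the function name `files_older_than` is made up for this example.

```python
import time
from pathlib import Path
from typing import List, Optional

SECONDS_PER_DAY = 86_400


def files_older_than(root: Path, days: int, now: Optional[float] = None) -> List[Path]:
    """Return files under root whose modification time is more than `days` days ago."""
    if now is None:
        now = time.time()
    cutoff = now - days * SECONDS_PER_DAY
    if not root.is_dir():
        # Nothing to report if the pool or share folder doesn't exist.
        return []
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.stat().st_mtime < cutoff
    )
```

Calling something like `files_older_than(Path("/mnt/hddcache/TV"), 30)` would list the candidates for archiving. That path is an assumption (a pool named `hddcache` with a `TV` share on it), so swap in your own pool and share names.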

As I said in the overview, I use this in conjunction with SSD cache. The following shares should be configured as Prefer:SSDcache, and should never live on the array:

- appdata (apps will be extremely slow on the array)

- domains (want a slow VM? put it on the array)

- system (ever have your web interface go down during a parity check?)

Server 2

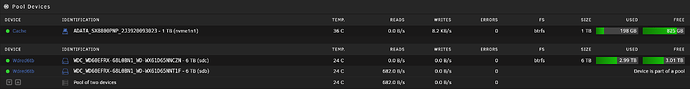

This server is my Unraid gaming machine. I don’t do anything fancy with CA Mover Tuning, but I do have an HDD cache pool. This one is a pair of WD Red 6TB drives in BTRFS RAID1 (mirror). I use it for large file ingestion, but I move content off of it daily. I also use it to store a large library of games that I don’t want on my array, by using Prefer:HDDcache on that share. It also holds a vDisk for my gaming VM that is used for bulk game storage on Steam - games that aren’t affected by loading time, basically.

Conclusion

HDDs are useful tools in cache pools due to their size:cost ratio. You won’t burn them up by writing large files to them constantly like you would an SSD. This is a relatively new feature of Unraid, and I see a lot of people aren’t using it yet. HDD cache isn’t ideal for every situation, but most would benefit from adding at least one to their setup.

If you have any questions, feel free to ask!