The only reason to merge them is convenience. Also, if I were to build a separate PC and follow this guide so I could do some remote gaming, I would have to purchase another Unraid license. That would be another reason to merge them.

However, if I would get even marginally better performance by keeping them separate, then I’m absolutely down to do that.

If the goal is just remote gaming, then just put Parsec on a gaming PC and remote into it. It doesn’t have to be a VM on Unraid to do that.

That’s true actually. Thank you for the advice! Time to get started.

Thank you for the info. Are these instructions still valid in 2024?

@stuffwhy to the rescue again. Much appreciated as always.

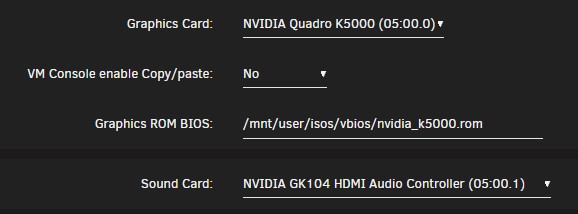

I have spent most of the weekend trying to bind my NVIDIA Quadro K5000 to my Windows 10 or 11 VMs. No can do! Every time I choose the card from the VM’s graphics card dropdown menu and restart the VM, it just won’t boot into the OS.

I lose the ability to remote into the machine, and obviously my Plex Media Server is not accessible either. I don’t know what I am doing wrong.

I posted on the UR forum as well, with screenshots:

https://forums.unraid.net/topic/162621-help-needed-with-gpu-passthrough/#comment-1408004

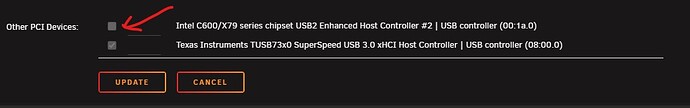

Seems like you haven’t isolated the GPU and its audio component in System Devices.

I have already checked both of these boxes, if that is what you are referring to.

Oh. Good. Your Unraid forum post did not show that, as far as I saw. And you’ve restarted since checking those boxes? That should do it. In my experience, NVIDIA cards don’t even need their BIOSes after about Unraid 9.10, but that will probably vary a lot. Other than that, I’m not too sure. I’ve done several VMs following the SB guide very, very closely and had success.

Yes, of course I rebooted, but having those two boxes checked or unchecked did not help at all. What I find odd is that I do not see the NVIDIA PCI devices in the Other PCI Devices section when editing my VM.

I had no trouble isolating two separate USB controllers, one of which I am using in my Windows 10 VM and the other in a Windows 11 VM. But I simply cannot pass through the GPU.

I don’t think they show in “Other” devices… they’ll show under Graphics or Sound as appropriate.

Yes, they are visible in the dropdown menu. However, once I select them and restart the VM, it does start, but it is no longer accessible via Remote Desktop, and pinging the VM times out. I tried with and without the ROM file as well, to no avail.

Reverting to virtual card sets everything right again. The GPU passthrough was one of the main reasons that I decided to move away from ESXi and give UR a try.

What do the logs say for the unsuccessful boots?

Where can I access that log file? Perhaps I did not mention that the NVIDIA card appears in the VM dropdown menu even if it is not isolated (checked) in System Devices.

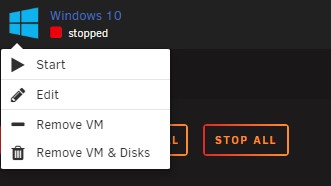

As you can see in the screenshot, the VM does start, but again it is not accessible / reachable.

Click the Windows logo icon there and pick Logs.

That’s strange, because that is what I did before asking where the log file could be found. I just tried again and this is all that I am presented with: “VM stopped”.

Sorry, I just realized that I had to start the VM first in order to see the Logs option. Brain freeze, sorry about that.

This is the log without the NVIDIA card isolated in System Devices and with the VM started:

Log not isolated

This link should lead you to some log results that I gathered with and without the Nvidia card having been isolated. Please let me know if you have any issues accessing those log files. Thank you so much for taking the time to assist me. I’m feeling pretty frustrated right now.

https://mega.nz/folder/t54HkICK#nXcvgAsP7719aoxUtPmJug

@stuffwhy Never mind. After much misery and brain overload, a light bulb just came on in my mind. In one of my previous replies I had mentioned that, prior to assigning the GPU to the VM, I was able to remote desktop into the VM using its IP address.

For some reason I decided to try accessing the VM via Remote Desktop, but this time using its machine / NetBIOS name instead. Go figure. All is good now.