Yes, that sounds like a DAS (direct-attached storage), and that’s something you can do with those external-facing ports.

Thanks. Not sure if this is the right thread to ask, but can I power on two PSUs synchronously? Or is it best to keep both units’ PSUs completely separate and power on one first, then the other?

You can power on both at the same time, but it involves some wiring.

It’s covered in one of the DAS guides: [Build Complete] - 20 Bay L4500 DAS w/ Linked PSUs

I’m looking at building my first NAS with TrueNAS or OMV, and I’m hoping to experiment with Docker on top of it. I was planning to use this as a sort of template, although a lot of the prices seem a bit out of date.

For the CPU, the 8100 now seems to be closer to $25-$30, but the 8300 and 8400 aren’t far behind, with the 8400 available for $45-$50. I also saw that the AMD 3100 can be had cheaply too, around $50. Obviously it would need a different motherboard, but it seems to have more PCIe lanes, and faster ones.

For the motherboard, does it really matter as long as it’s compatible with the CPU? It seems I can get ATX and mATX boards for under $50, including M.2 slots and whatnot. Some I’ve seen: GIGABYTE Z370 HD3, GIGABYTE B360 AORUS Gaming 3 WIFI, ASUS PRIME H310M-A, ASUS PRIME H310M-Dash, ASRock Z390 Taichi. They all seem viable, but I’m not sure whether there’s any reason to avoid certain ones, or whether anything other than I/O is a concern.

I’m new to building a NAS, but I have built four Windows desktops/servers over the past 20 years. I haven’t decided between Unraid and TrueNAS, but I have six identical 18TB HDDs, so TrueNAS is viable.

I’ve purchased a JONSBO N5 case, which has phenomenal space and supports E-ATX, ATX, mATX and ITX form factors (with a number of optional “iron column” placement options).

I have also received my X11SCA-F motherboard from China (in pristine condition; it looks new). That said, I want to upgrade the network speed to 10 Gbps and am considering the X11SPW-TF motherboard. On paper it looks like a superior board (for only $25 more), but it appears there are no compatible CPUs that support QSV (Quick Sync Video). I’ve confirmed that the JONSBO will accommodate the board.

So I have two questions:

- What is the “best” CPU option for the X11SCA-F if the NAS will be used primarily as a Plex server? Transcoding isn’t a major concern, since I keep the bulk of my content in MKV containers (H.265/HEVC or H.264/AVC encoded), but hardware transcoding would be helpful. I will be installing ECC UDIMM RAM. I’m looking at the i3-9300 (65W) or i3-9300T (35W), but there are some great prices in China for the Xeon E-2278G (80W), which can accommodate 128GB of RAM.

- Same question for the X11SPW-TF: do its benefits outweigh the lack of QSV support?

Thanks in advance!

New to the forum. I really appreciate all the resources it offers.

I’ve been doing lots of research on which parts would work best for my planned use.

I already have a Jonsbo N3. I currently have a 10TB drive that’s almost full of photos and videos.

Just wanted to confirm: do the parts in NK 6.0 still apply?

Use case:

- Up to 3 simultaneous users (occasionally).

- Store photos, videos, documents.

- Remote access to transfer photos and videos while on vacation?

- Edit photos/videos directly from the NAS.

- Store Blue Iris recordings (which is currently running on a standalone HP workstation).

- Budget $400, not including drives.

What parts do you recommend for this use? I’m mainly stuck on the motherboard and CPU.

I’ve watched/read many videos and posts. The N100, i3-N305 and i3-12100 seem very popular.

Any assistance is greatly appreciated.

Thanks,

Joe

I bought a used Supermicro X11SCA-F on eBay. I don’t mind that it didn’t come with SATA cables, but it also didn’t come with the M.2 holder. Do I need to buy one separately, or will it be fine without one?

Will the X11SCA-F be mounted horizontally or vertically? If the board lies flat, it won’t matter at all. If it’s mounted on edge (more likely in a standard chassis), I suspect vibration plus gravity could eventually dislodge the card.

Welcome to Serverbuilds!

The Intel Xeon Scalable server platform is a very different beast from the consumer-focused LGA 1151 platform; they have basically opposite design goals. The server platform is designed to scale to a much larger number of users/threads, like an internet server handling thousands of users at once, but it does so at the cost of single-threaded performance, which is what you want in a home server. It also draws more power, resulting in more heat and noise, and it lacks creature comforts such as an iGPU (and therefore QSV) entirely.

Long story short, I highly recommend sticking with the X11SCA-F you have; you can easily drop a 10 Gbps network card into it and be good to go.

The “best” CPU depends on your requirements. The highest-performing options are the 8-core Xeons like the E-2278G or E-2288G. However, they are typically quite expensive.

I would be careful about any inexpensive E-2278G or similar CPU that you find. Make sure that it is not a “Quality Control Sample” (QS) or especially an “Engineering Sample” (ES). These are not production-ready CPUs. They are used internally by Intel during the development process; they may not have all their cores active, probably won’t hit the same speeds as a production chip, and may have certain features disabled or non-functional. Get a picture of the actual CPU and don’t buy anything that says “Intel Confidential” on it; it won’t perform like a production product.

I would recommend getting a Core i3 like an i3-8300 or i3-9300 and only upgrading to a Xeon if you find you are running into actual CPU bottlenecks or want to run a large number of VMs at the same time. Both the Core i3 and the Xeon should support up to 128 GB of ECC unbuffered DIMMs (UDIMMs).

Hi All,

Just wanted to write to thank everyone on this forum for the time and effort they put in and all the amazing information here.

When I started about a year ago, I really had no idea what I was doing with server hardware. I had been running a 70TB Windows 10 “NAS” with StableBit DrivePool and SnapRAID for about 8 years without problems, but I knew that I needed/wanted something more robust and with better performance.

Based on everything I read here and on Reddit, I determined that I wanted a completely new server (I’m using the old one as a backup now) with the following criteria: 1) capacity, 2) resilience, 3) performance. My other big goal, however, was to keep power draw to an absolute minimum (but yes, there are 12 spinning-rust drives… SSDs just aren’t cheap enough or big enough yet).

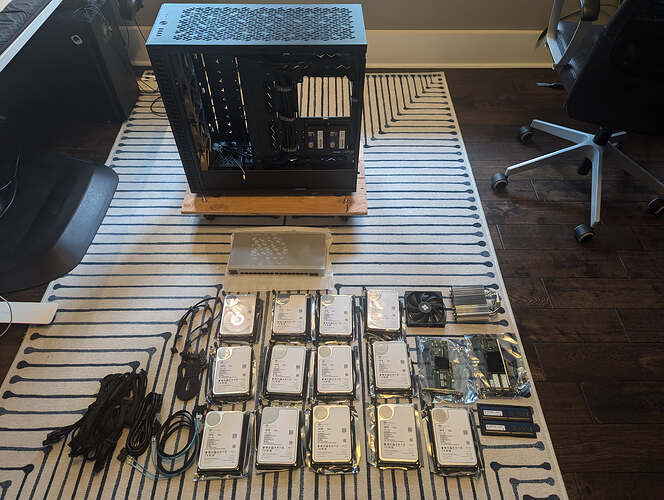

After a *lot* of reading, I ended up following a modified version of the NAS Killer 6.0 template and bought:

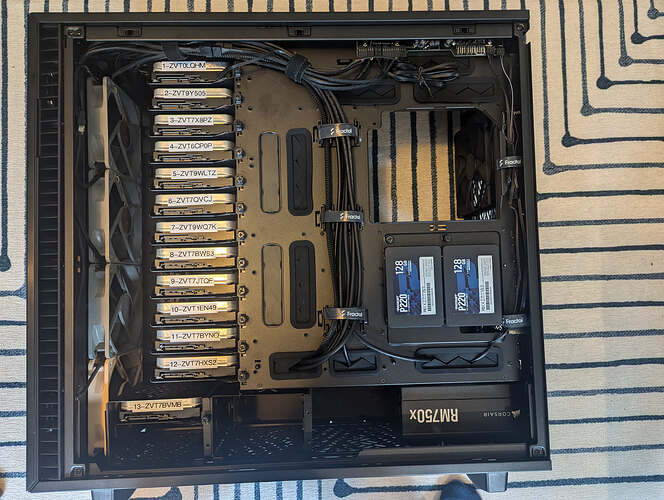

- Fractal Design 7XL (Amazon) - Converted to storage mode. The most expensive thing I bought besides the drives, but it’s a great case with lots of space, and I didn’t have the closet space for a rack. None of the recommended cases were big enough or available in Canada. Easily the best case I’ve ever owned, though.

- Corsair RM750x (Amazon) - I really wanted a high-quality, efficient power supply. I couldn’t find any lower-wattage Corsair RM-series units at a reasonable price, new or used. Again pricey, but important to me.

- Supermicro X11SCA-F (eBay) - From the China seller that Ian recommended here. I bought it mostly for the two M.2 slots but didn’t end up using them; I do like the IPMI access, though. I might use the M.2 slots for cache or a fast pool later.

- Intel Core i3-9100 (eBay) - I wanted ECC RAM support, and this is plenty of power for my NAS.

- A-Tech 2x 32GB ECC PC4-21300 DDR4 (Amazon) - Yes, DDR4 is cheap; no, ECC DDR4 is not, especially UDIMMs. This was a tough purchase because A-Tech isn’t a great brand name, but finding other ECC RAM that I was sure would work was difficult, and I wanted a good return policy in case it didn’t work.

- LSI HBA 9211-8i (eBay) - Great HBA, easy to flash, have one in my other build. Enough said.

- Dell X710-DA2 NIC (eBay) - I wanted an SFP+ 10 Gbps NIC, and I also wanted one that would let the system reach the lowest possible C-state for efficiency.

- 2x Patriot P220 128GB SSDs (Amazon) - The smallest, cheapest boot disks I could find; I wanted them mirrored.

- 13x 18TB Seagate Exos X20s (ServerPartDeals) - More than double the cost of everything else in my build, but I wanted the space. 12 drives in two RAIDZ2 vdevs, plus one spare.

- Plus a few miscellaneous Noctua fans and SATA power splitters, all new.

Yes, I could have saved more by buying more stuff used, but several items weren’t available in Canada or had insane shipping costs. Plus, reliability was key for me, and new is usually more reliable.

Everything went together beautifully. The only problem was that I didn’t realize the Supermicro only shows POST output over VGA unless you change a BIOS setting (and I didn’t know the IPMI password). After a fair bit of frustration (I had to buy a VGA cable), everything came together.

I decided to use TrueNAS instead of Unraid for performance reasons. Cost was a factor, but mostly I figured I could get a performance bump with TrueNAS, and I do move a lot of big files around my network. Hence two RAIDZ2 vdevs of six drives each.
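For a rough sense of what that layout yields: each RAIDZ2 vdev gives up two drives’ worth of space to parity. The sketch below assumes 18 TB (decimal) drives and deliberately ignores ZFS metadata, allocation padding and slop space, so real usable space will be somewhat lower; the function name is just illustrative.

```python
TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # operating systems usually report binary tebibytes

def raidz_usable_bytes(vdevs: int, drives_per_vdev: int, parity: int, drive_bytes: int) -> int:
    """Upper-bound usable capacity for a pool of identical RAIDZ vdevs.

    Ignores ZFS padding, metadata and slop space (assumption for simplicity).
    """
    if drives_per_vdev <= parity:
        raise ValueError("each vdev needs more drives than parity disks")
    return vdevs * (drives_per_vdev - parity) * drive_bytes

# Layout from the post: two 6-wide RAIDZ2 vdevs of 18 TB drives.
usable = raidz_usable_bytes(vdevs=2, drives_per_vdev=6, parity=2, drive_bytes=18 * TB)
# Alternative for comparison: one 12-wide RAIDZ2 vdev with the same drives.
single = raidz_usable_bytes(vdevs=1, drives_per_vdev=12, parity=2, drive_bytes=18 * TB)

print(f"2 x 6-wide RAIDZ2:  {usable / TB:.0f} TB ({usable / TIB:.1f} TiB)")
print(f"1 x 12-wide RAIDZ2: {single / TB:.0f} TB ({single / TIB:.1f} TiB)")
```

Going from one 12-wide vdev to two 6-wide vdevs costs two more drives of parity (144 TB vs. 180 TB raw usable), in exchange for better random I/O.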

With all drives spinning, the NAS idles at around 90 W and maxes out at about 150 W, which is about 40 W lower than my old Windows machine. Unfortunately, the HBA limits the C-states that TrueNAS can reach, but from extensive reading there doesn’t seem to be a reasonable alternative.
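To put wattage figures like these in perspective, here is a quick annual-energy estimate. It treats the 90 W idle figure and the 40 W difference as constant, around-the-clock loads, which is a simplification, and the electricity price is a hypothetical placeholder rather than anything from the post; substitute your own rate.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years

def annual_kwh(watts: float) -> float:
    """Energy used in one year by a constant load, in kWh."""
    return watts * HOURS_PER_YEAR / 1000

def annual_cost(watts: float, price_per_kwh: float) -> float:
    """Yearly cost of a constant load at a flat electricity rate."""
    return annual_kwh(watts) * price_per_kwh

IDLE_W = 90      # idle draw reported in the post, all drives spinning
SAVINGS_W = 40   # reported difference vs. the old machine (assumed constant)
PRICE = 0.15     # hypothetical price per kWh; replace with your local rate

print(f"Idle:       {annual_kwh(IDLE_W):.1f} kWh/yr, about {annual_cost(IDLE_W, PRICE):.2f} per year")
print(f"40 W saved: {annual_kwh(SAVINGS_W):.1f} kWh/yr, about {annual_cost(SAVINGS_W, PRICE):.2f} per year")
```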

The NAS has been running perfectly, with absolutely no problems, since August 2024. I did have one drive throw some errors, but the RMA process through ServerPartDeals was insanely smooth (and I’d bought a spare, so almost no stress!).

Along with this NAS, I’m running three Lenovo M920qs for my firewall, Plex, and VMs, based on what I learned here.

TL;DR: Thank you all for everything on this site. So much helpful information and knowledge; I couldn’t have done it without you. I especially want to call out @Ian for all his super insightful posts. You guys rock!

I just finished building an NK6. The problem is that the Supermicro X11SCA-F motherboard emits a long, continuous beep when booting. Weirdly, the beep does not occur on a cold boot (PSU switch), only when powering on with the front panel button. The beep eventually stops while the Unraid OS is loading.

The motherboard manual says this beep is an overheat warning, which makes no sense because the system is certainly not hot, or even warm, when the beep occurs.

All of my drives are recognized, the fans all work, and the CPU and RAM show no errors (tested with MemTest86). The CPU is not too hot either. The HBA has a fan strapped to it, so I don’t think that’s the issue either.

Hardware: i5-8500T, Supermicro X11SCA-F, Thermaltake Smart 600W PSU, Adaptec ASR-78165

I have tried booting without drives attached and without the Adaptec card, testing the RAM slots individually, verifying the front panel pins, and making sure both PSU cables are securely attached to the motherboard. I’m not sure what else this could be, and I’m especially confused because the beep only occurs during a normal boot, not from a cold boot.

It will be vertical (on edge). I just got the card in, and fortunately the plastic securing mechanism seems to fit 2280 drives perfectly, so it should work just fine. Thanks!

Unfortunately, like an idiot, I must not have looked carefully at my CPU when it arrived, because now that it is installed with the heatsink over it, my Supermicro board is telling me the CPU is an i5-9400F instead of the i5-8500T. If anyone can offer advice, I’d appreciate it, as I’m unsure whether this is a small, medium, or big deal. Fortunately, for now I’m going to run my Plex server on an HP290, so I don’t need the iGPU for that. But I like the flexibility of having an iGPU and was thinking about doing other things with it. Should I pursue the seller over this? Or is the 9400F somehow actually better for me?

You’ve got a faster CPU, but it lacks an iGPU. If the iGPU is important to you and/or you feel strongly about getting exactly what you ordered (which is very reasonable, IMO), contact the seller. If you’re happy with a faster CPU and don’t mind not receiving what you ordered, then keep it, I guess.

Would the thermal paste clean off well enough to be returnable? I’ve never removed a heatsink before.

Turns out the thermal paste can stay on. Maybe I’m just not familiar with how IPMI refreshes its data, but when I booted the server back up today, it identified the CPU correctly as the i5-8500T. I’m guessing my Supermicro board had a 9400F in it before it was sold to me, and it took time for IPMI to refresh the data (if anyone knows how this works, I’d love to hear the full explanation). Thanks for all the help!

Hey all,

I stumbled across this thread and now it has me curious.

I am currently running a Dell PowerEdge R810 with four Intel Xeon E7-4870 CPUs, attached to a Dell PowerVault MD1000. I’m looking into upgrading or changing things around to reduce power consumption.

Would a build similar to NAS Killer 6.0 be an upgrade over what I’m currently running, or would it actually be a downgrade?

Thanks for any input I receive.

Hi Ian - thank you for your detailed response. A couple of further questions:

- I purchased an i3-9300 CPU based on your recommendation that it would support 128GB of RAM. Since Intel only specifies a maximum of 64GB for this CPU, do you have experience running more than that?

- I also purchased a Dell X710-DA2 10 Gbps NIC. With the X11SCA-F, is it possible to “bond” the two ports? And does it make sense with 1 Gbps internet service? (Gigabits, not gigabytes.)

I’m building essentially the same system you described in your post. Apart from using a JONSBO N5 chassis (my backup system is a Fractal 7XL with 15 mixed HDD/SSD/NVMe drives), the NAS I’m constructing is virtually the same: X11SCA-F, LSI HBA, Dell X710-DA2, A-Tech ECC UDIMM PC4-25600 DDR4 (hopefully the faster speed isn’t an issue), six 18TB Seagate IronWolf Pro drives, two 500GB SSDs, and a Corsair RM650. I’ve also added two 16GB Optane NVMe drives and one HP 2TB NVMe (see below).

I am a PC “expert” but a NAS neophyte. Nonetheless, I’m also planning on using TrueNAS Electric Eel. And therein lies the reason for my post:

- I’m building the NAS initially as a Plex server. Later I plan to have it act as a web server as well, but that is a few years away (so perhaps the SLOG can wait).

- I noticed you didn’t say whether you are using any of the following vdevs in your system: L2ARC (my 2TB NVMe); a mirrored SLOG (not critical for read-focused file servers, but I plan to mirror the two 500GB SSDs for this purpose, especially once I add the web server); and a mirrored special vdev for metadata (mirroring the two 16GB NVMe drives; I’ll have to get an NVMe expansion card).

- Did you bond your two 10Gb ports? Since I only have 1 Gb symmetrical fiber internet (upgradable to 2 Gb), is there any point? Do you use RJ45 SFP modules or optical?

I apologize in advance if any of these questions are ridiculous. I just keep plodding along.

Finally - is what I am doing overkill?

Hey! Glad my wall of text helped someone!

I’m a PC “expert” too, but not a server guy at all, though I have done a lot of reading here, on Reddit, and on various other forums. So take anything I say with a grain of neophyte salt.

You’ll be fine with memory rated faster than the motherboard supports; it will simply run at the lower supported speed.

- Mine is primarily a Plex build too. I decided to run Plex in an LXC container under Proxmox on a Lenovo M920q to keep my NAS separate from Plex itself. I like the idea of keeping the NAS as a NAS and not overcomplicating it by adding containers; probably personal preference. I also have another M920q for all my other Docker containers (web server, *arr stack, etc.).

- After a bunch of reading and watching, I decided not to add any of those extra vdevs. This video was helpful, but there was another one on the TrueNAS site that really helped; unfortunately I can’t find it anymore. You can add special vdevs later if you think they’ll help, but 6 months in I still don’t think I need them (plus they are another potential point of pool failure).

- Nope, I didn’t. I upgraded my network to 10 Gb/2.5 Gb at the same time, which was a huge throughput increase over the old 1 Gb network. Getting roughly 8x the copy speed for big media files is amazing. You’ll want to look into IOPS vs. Throughput (probably the best thing I read on pool layouts). I’m really glad I went with two vdevs instead of one, even though I lost some capacity, because IOPS really do matter for overall performance. Honestly, I think the biggest performance increase for my current system would come from adding another vdev (which will have to wait until I win the lottery!).
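A back-of-the-envelope model of both points above. It leans on a common ZFS rule of thumb that each RAIDZ vdev delivers roughly the random IOPS of one member disk, so random IOPS scale with vdev count, and it assumes a large sequential copy is capped by the slower of the network link and the pool. The per-disk IOPS, pool throughput and link-efficiency numbers are illustrative assumptions, not measurements from this build.

```python
def pool_random_iops(vdevs: int, per_disk_iops: float) -> float:
    """Rule of thumb: each RAIDZ vdev gives roughly one disk's worth of random IOPS."""
    return vdevs * per_disk_iops

def link_mb_per_s(gbps: float, efficiency: float = 0.94) -> float:
    """Usable payload rate of an Ethernet link in MB/s (decimal).

    `efficiency` is an assumed allowance for protocol overhead.
    """
    return gbps * 1000 / 8 * efficiency

def copy_seconds(file_gb: float, link_gbps: float, pool_mb_per_s: float) -> float:
    """Time to copy one large file, limited by the slower of network and pool."""
    rate = min(link_mb_per_s(link_gbps), pool_mb_per_s)
    return file_gb * 1000 / rate

PER_DISK_IOPS = 150  # assumed figure for a 7200 rpm HDD
POOL_MB_S = 1000     # assumed sequential throughput of the pool

print("1 vdev: ", pool_random_iops(1, PER_DISK_IOPS), "random IOPS")
print("2 vdevs:", pool_random_iops(2, PER_DISK_IOPS), "random IOPS")
for gbps in (1, 2.5, 10):
    print(f"50 GB over {gbps} Gb/s: {copy_seconds(50, gbps, POOL_MB_S):.0f} s")
```

With these assumed numbers, the 10 Gb copy comes out about 8.5x faster than the 1 Gb copy, because at 10 Gb the pool rather than the link becomes the limit; that is in the same ballpark as the roughly 8x described above, but real results depend on the disks, record sizes and protocol.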

With a similar system, it wasn’t overkill at all for me. I have about 35 people on my Plex server (at varying levels of usage), and everything is working perfectly. I’m really happy with it from a performance and stability perspective. Hopefully I’ll never have to touch it for 10+ years.

Hope that helps!

Has anyone successfully installed an M.2 NVMe expansion card on the X11SCA-F? I would like to add 4 additional NVMe drives. Note that I already have an LSI HBA installed.